UPDATE: Nvidia Corp. says its highly anticipated Rubin data center products are nearing release, with customers expected to begin testing the technology soon. All six of the new Rubin chips have returned from manufacturing and passed milestone tests, which the company says puts them on track for deployment later this year.

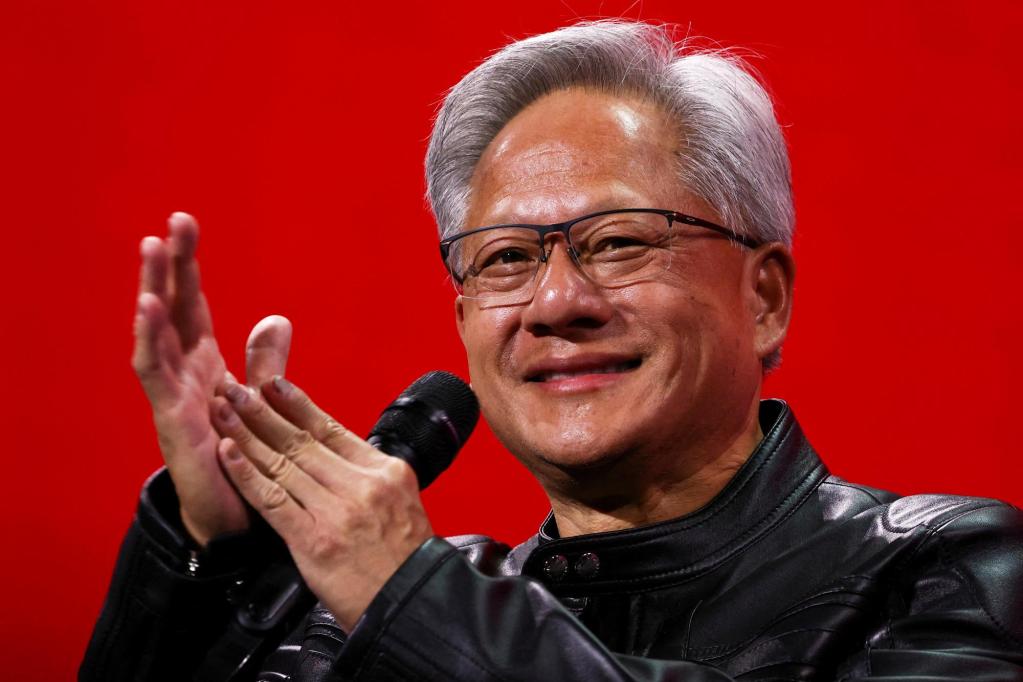

During a keynote presentation at the CES trade show in Las Vegas on January 5, 2026, CEO Jensen Huang said, “The race is on for AI. Everybody’s trying to get to the next level.” Nvidia is seeking to maintain its lead in the market for artificial intelligence accelerators, the chips that data center operators use to develop and run AI models.

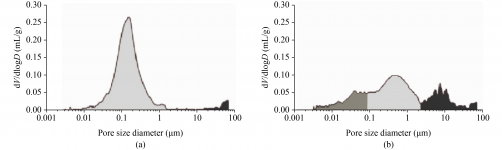

Nvidia says the Rubin chips are 3.5 times better at training AI and five times better at running AI software than their predecessor, Blackwell. The new central processing unit has 88 cores and delivers twice the performance of the CPU it replaces. The added capability matters as AI evolves toward more specialized networks that solve complex problems through multistage processes.

Nvidia is revealing product details earlier than usual, aiming to keep the industry engaged with its cutting-edge hardware, which has been pivotal in the recent surge of AI applications. Typically, Nvidia unveils such details at its spring GTC event in San Jose, California.

As Huang promotes the new technology, he faces increasing competition from rivals such as Advanced Micro Devices Inc., whose CEO, Lisa Su, is also set to deliver a keynote at CES. Concerns are mounting on Wall Street about the sustainability of AI spending, especially as data center operators begin developing their own AI accelerators. Nvidia remains optimistic, however, forecasting that the total market will be worth trillions of dollars.

The Rubin hardware will be integrated into the DGX SuperPod supercomputer but will also be available as standalone products, allowing customers to adopt a more modular approach. Nvidia says Rubin-based systems will be more cost-effective than Blackwell versions because they deliver the same results with fewer components.

Major players in the tech industry, including Microsoft Corp., Alphabet Inc.’s Google Cloud, and Amazon.com Inc.’s AWS, are expected to be among the first to deploy the new hardware in the second half of this year. Currently, the bulk of spending on Nvidia-powered systems comes from the capital expenditure budgets of these tech giants.

Nvidia is also expanding its portfolio with new tools designed to expedite the development of autonomous vehicles and robotics, further broadening the adoption of AI across various sectors, including healthcare and heavy industry.

As the AI landscape rapidly evolves, Nvidia is positioning its Rubin chips as a significant step up in efficiency and performance over the previous generation.